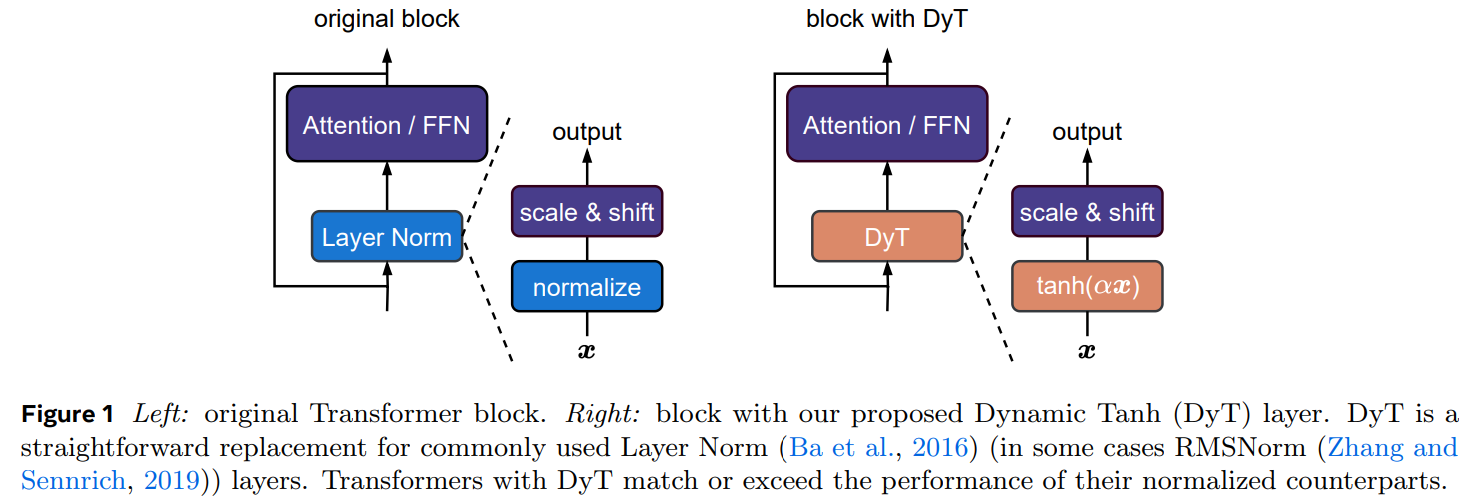

Dynamic Tanh (DyT): A Simplified Alternative to Normalization in Transformers

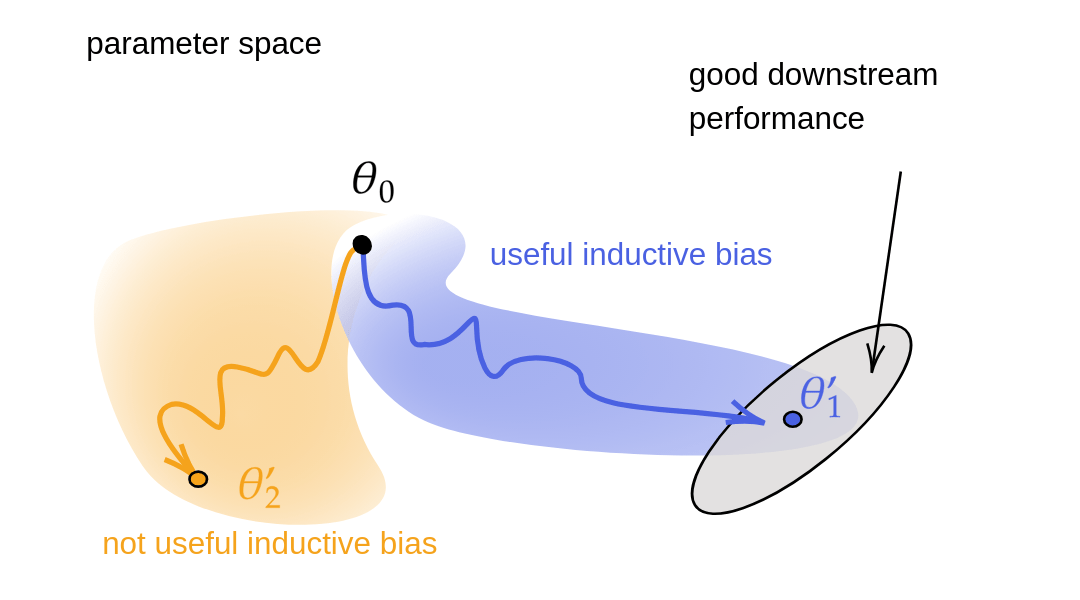

Normalization layers have become fundamental components of modern neural networks, significantly improving optimization by stabilizing gradient flow, reducing sensitivity to weight initialization, and smoothing the loss landscape. Since the introduction of batch normalization in 2015, various normalization techniques have been developed for different architectures, with layer normalization (LN) becoming particularly dominant in Transformer models. Their
