In his famous blog post Artificial Intelligence — The Revolution Hasn’t Happened Yet, Michael Jordan (the AI researcher, not the one you probably thought of first) tells the story of how a faulty AI prediction could have cost him his unborn daughter. He speculates that many children die needlessly each year in the same way. Setting aside the specifics, his case is one example of an application in which an AI algorithm’s performance looked good on paper during development but led to bad decisions once deployed.

In our paper Bayesian Deep Learning is Needed in the Age of Large-Scale AI, we argue that the case above is not the exception but rather the rule and a direct consequence of the research community’s focus on predictive accuracy as a single metric of interest.

Our position paper was born out of an observation about the annual Symposium on Advances in Approximate Bayesian Inference. Despite its immediate relevance to these questions, it has attracted fewer and fewer junior researchers over the years. At the same time, many of our students and younger colleagues seemed unaware of the fundamental problems with current practices in machine learning research. This is especially true of large-scale efforts such as the work on foundation models, which attract most of the attention today but fall short in terms of safety, reliability, and robustness.

We reached out to fellow researchers in Bayesian deep learning and eventually assembled a group of researchers from 29 of the most renowned institutions around the world, working at universities, government labs, and industry. Together, we wrote the paper to make the case that Bayesian deep learning offers promising solutions to core problems in machine learning and is ready for application beyond academic experiments. In particular, we point out that there are many other metrics beyond accuracy, such as uncertainty calibration, which we have to take into account to ensure that better models also translate to better outcomes in downstream applications.

In this commentary, I will expand on the importance of decisions as a goal for machine learning systems, in contrast to singular metrics. Moreover, I will make the case for why Bayesian deep learning can satisfy these desiderata and briefly review recent advances in the field. Finally, I will provide an outlook for the future of this research area and give some advice on how you can already use the power of Bayesian deep learning solutions in your research or practice today.

Machine learning for decisions

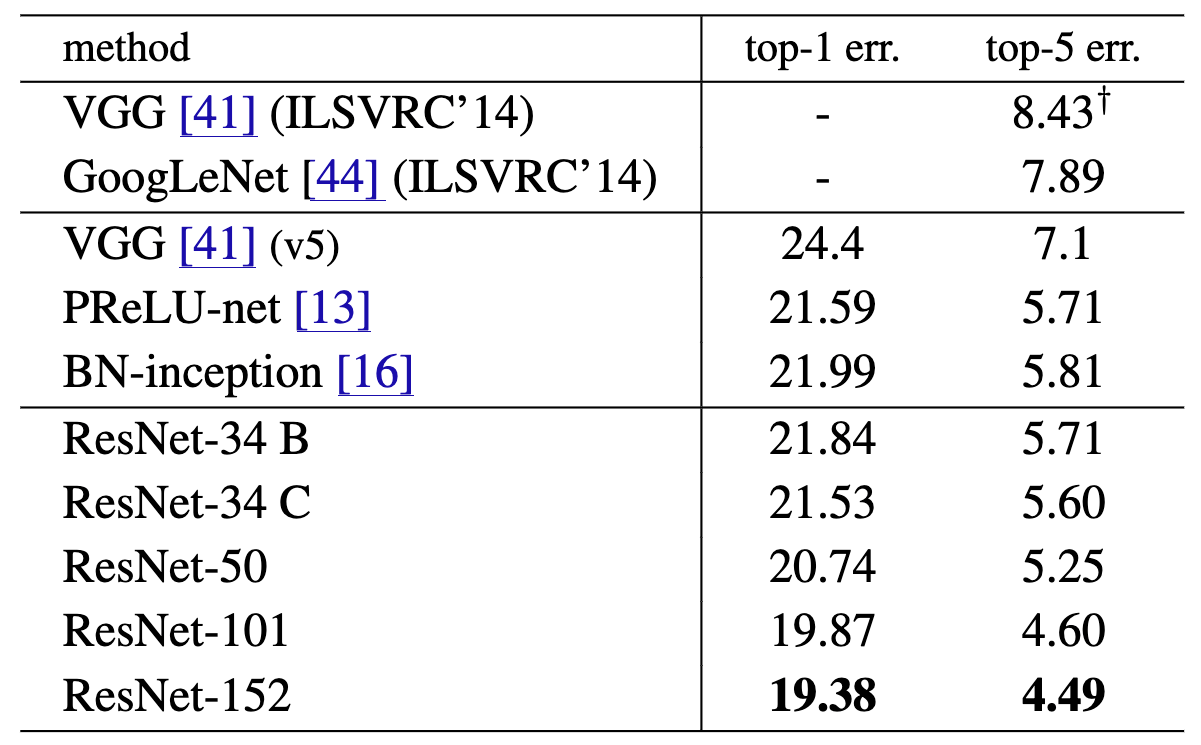

If you open any machine learning research paper presented at one of the big conferences, chances are that you will find a big table with a lot of numbers. These numbers usually reflect the predictive accuracy of different methods on different datasets, and the line corresponding to the authors’ proposed method probably has a lot of bold numbers, indicating that they are higher than the ones of the other methods.

Based on this observation, one might believe that bold numbers in tables are all that matters in the world. However, I would argue strongly that this is not the case. What matters in the real world are decisions or, more precisely, decisions and their associated utilities.

A motivating example

Imagine you overslept and are now at risk of being late for work. Moreover, there is a new construction site on your usual route, and there is also a parade in town today. This makes the traffic situation rather hard to predict. It is 08:30 am, and you have to be at work by 09:00. There are three routes you can take: through the city, via the highway, or through the forest. How do you choose?

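Luckily, some clever AI researchers have built tools that can predict the time each route takes. There are two tools to choose from, Tool A and Tool B, and these are their predictions:

| Route | Tool A | Tool B |
|---|---|---|
| City | 35 min | 28 min |
| Highway | 25 min | 32 min |
| Forest | 43 min | 35 min |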

Annoyingly, Tool A suggests that you should take the highway, but Tool B suggests the city. However, as a tech-savvy user, you know that B uses a newer algorithm, and you have read the paper and marveled at the bold numbers. You know that B yields a lower mean-squared error (MSE), a common measure of predictive performance on regression tasks.

Confidently, you choose to trust Tool B and thus take the route through the city—just to arrive at 09:02 and get an annoyed side-glance from your boss for being late.

But how did that happen? You chose the best tool, after all! Let’s look at the ground-truth travel times:

| Route | True travel time |
|---|---|
| City | 32 min |
| Highway | 25 min |
| Forest | 35 min |

As we can see, the highway was actually the fastest route and, in fact, the only one that would have gotten you to work on time. But how is that possible? This becomes clear when we compute the MSE of the two tools’ predictions against these times:

MSE(A) = [ (35-32)² + (25-25)² + (43-35)²] / 3 = 24.3

MSE(B) = [ (28-32)² + (32-25)² + (35-35)²] / 3 = 21.7

Indeed, we see that Tool B has the better MSE, as advertised in the paper. But that didn’t help you, did it? What you ultimately cared about was not having the most accurate predictions across all possible routes. It was making the best decision about which route to take, namely the one that gets you to work on time.

While Tool A makes worse predictions on average, its predictions are better for routes with shorter travel times and get worse the longer a route takes. It also never underestimates travel times.

To get to work on time, you don’t care about the predictions for the slowest routes, only about the fastest ones. You’d also like to have the confidence to arrive on time and not choose a route that then actually ends up taking longer. Thus, while Tool A has a worse MSE, it actually leads to better decisions.
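The arithmetic above can be checked with a few lines of Python, using the travel times from the MSE computation. The decision rule in `chosen_route` (take the route with the shortest predicted time) is an assumption about how a user acts on a point prediction. The names are illustrative only.

```python
# Predicted and true travel times (minutes) from the example.
ROUTES = ["city", "highway", "forest"]
TOOL_A = {"city": 35, "highway": 25, "forest": 43}
TOOL_B = {"city": 28, "highway": 32, "forest": 35}
TRUTH = {"city": 32, "highway": 25, "forest": 35}

DEADLINE_MIN = 30  # leave at 08:30, must arrive by 09:00


def mse(pred: dict[str, float], truth: dict[str, float]) -> float:
    """Mean-squared error over all routes (the metric in the paper's table)."""
    return sum((pred[r] - truth[r]) ** 2 for r in ROUTES) / len(ROUTES)


def chosen_route(pred: dict[str, float]) -> str:
    """Assumed decision rule: take the route with the shortest predicted time."""
    return min(ROUTES, key=lambda r: pred[r])


for name, tool in [("A", TOOL_A), ("B", TOOL_B)]:
    route = chosen_route(tool)
    actual = TRUTH[route]
    print(f"Tool {name}: MSE={mse(tool, TRUTH):.1f}, picks {route}, "
          f"takes {actual} min, on time: {actual <= DEADLINE_MIN}")
# Tool A: MSE=24.3, picks highway, takes 25 min, on time: True
# Tool B: MSE=21.7, picks city, takes 32 min, on time: False
```

The metric and the decision disagree because the MSE weighs errors on every route equally. The decision only depends on which route is predicted fastest and whether that prediction is too optimistic.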

Uncertainty estimation to the rescue

Of course, if you had known that the prediction could be so wrong, you might never have trusted it in the first place, right? Let’s add another useful feature to the predictions: uncertainty estimation.

Here are the original two algorithms and a new third one (Tool C) that estimates its own predictive uncertainties:

The ranking based on the mean predictions of Tool C agrees with that of Tool B. However, you can now assess how much risk there is that you will be late for work. Your true utility is not to get to work in the shortest possible time but to get there on time, i.e., within 30 min.

According to Tool C, the drive through the city can take between 17 and 32 min, so while it seems to be the fastest on average, there is a chance that you will be late. In contrast, the highway takes between 25 and 29 min, so you will be on time in either case. Armed with these uncertainty estimates, you would correctly choose the highway.
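One way to turn such intervals into a decision is to pick the route with the highest probability of arriving on time. The sketch below assumes, purely for illustration, that travel time is uniformly distributed within Tool C’s interval. A real tool would supply its own predictive distribution. The forest route is left out because its interval is not given.

```python
# Tool C's intervals (minutes) from the example; forest omitted (interval not stated).
INTERVALS = {"city": (17.0, 32.0), "highway": (25.0, 29.0)}
DEADLINE_MIN = 30.0


def p_on_time_uniform(low: float, high: float, deadline: float) -> float:
    """P(travel time <= deadline), ASSUMING travel time ~ Uniform[low, high]."""
    if deadline <= low:
        return 0.0
    if deadline >= high:
        return 1.0
    return (deadline - low) / (high - low)


def safest_route(intervals: dict[str, tuple[float, float]], deadline: float) -> str:
    """Pick the route that maximizes the probability of arriving on time."""
    return max(intervals, key=lambda r: p_on_time_uniform(*intervals[r], deadline))


for route, (lo, hi) in INTERVALS.items():
    print(route, round(p_on_time_uniform(lo, hi, DEADLINE_MIN), 3))
print("choose:", safest_route(INTERVALS, DEADLINE_MIN))
# city 0.867
# highway 1.0
# choose: highway
```

Under this uniform assumption, the city has the lower expected time (24.5 min versus 27 min). The highway still wins because the utility is about the deadline, not the mean.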

This was just one example of a scenario in which we are faced with decisions whose utility does not correlate with an algorithm’s raw predictive accuracy, and uncertainty estimation is crucial to making better decisions.

The case for Bayesian deep learning

Bayesian deep learning uses the foundational statistical principles of Bayesian inference to endow deep learning systems with the ability to make probabilistic predictions. These predictions can then be used to derive uncertainty intervals of the form shown in the previous example (which a Bayesian would call “credible intervals”).

Uncertainty intervals can encompass aleatoric uncertainty, that is, the uncertainty inherent in the randomness of the world (e.g., whether your neighbor decided to leave the car park at the same time as you), and epistemic uncertainty, related to our lack of knowledge (e.g., we might not know how fast the parade moves).
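A common way to separate the two kinds of uncertainty relies on the law of total variance. It assumes that we have several samples from an (approximate) posterior over the model’s parameters and that each sampled model predicts a mean and a noise variance. The average predicted noise variance is the aleatoric part, and the spread of the predicted means across samples is the epistemic part. The numbers below are hypothetical.

```python
import statistics


def decompose_uncertainty(means: list[float], noise_vars: list[float]) -> tuple[float, float, float]:
    """Split predictive variance into aleatoric and epistemic parts.

    means[s], noise_vars[s]: predictive mean and observation-noise variance
    under the s-th posterior sample (e.g. one network drawn from the posterior).
    """
    aleatoric = statistics.fmean(noise_vars)  # E_theta[ Var(y | x, theta) ]
    epistemic = statistics.pvariance(means)   # Var_theta( E[y | x, theta] )
    return aleatoric, epistemic, aleatoric + epistemic


# Hypothetical city travel-time predictions (minutes) from four posterior samples.
means = [24.0, 26.0, 28.0, 30.0]
noise_vars = [4.0, 4.0, 4.0, 4.0]
aleatoric, epistemic, total = decompose_uncertainty(means, noise_vars)
print(aleatoric, epistemic, total)  # 4.0 5.0 9.0
```

Collecting more data typically shrinks the epistemic term, because the posterior samples start to agree. The aleatoric term reflects randomness that no amount of data removes.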

Crucially, by applying Bayes’ theorem, we can incorporate prior knowledge into the predictions and uncertainty estimates of our Bayesian deep learning model. For example, we can use our understanding of how traffic flows around a construction site to estimate potential delays.

Frequentist statisticians often criticize this aspect of Bayesian inference as “subjective” and advocate “distribution-free” approaches, such as conformal prediction, which give provable guarantees for the coverage of the prediction intervals. However, these guarantees hold only on average across all predictions (in our example, across all routes), not necessarily for any given case.

As we have seen in our example, we don’t care much about the accuracy (and, by extension, the uncertainty estimates) for the slower routes. As long as the predictions and uncertainty estimates for the fast routes are accurate, a tool serves its purpose. Standard conformal methods guarantee only marginal coverage, averaged over all routes. In general, they cannot provide a conditional coverage guarantee for each individual route, which limits their applicability in many scenarios.
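To make the marginal-versus-conditional distinction concrete, here is a sketch of standard split conformal prediction, a well-known frequentist technique rather than part of the Bayesian toolkit. It runs on hypothetical data with two kinds of inputs: a predictable group with noise standard deviation 1 and an unpredictable one with standard deviation 5. The predictor is assumed to know the true mean, so residuals are pure noise.

```python
import math
import random


def split_conformal_halfwidth(cal_residuals: list[float], alpha: float) -> float:
    """Half-width q of split-conformal intervals [y_hat - q, y_hat + q].

    cal_residuals: |y - y_hat| on a held-out calibration set. Under
    exchangeability, coverage >= 1 - alpha holds marginally (on average
    over test points), not conditionally on any particular input.
    """
    n = len(cal_residuals)
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the conformal quantile
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(cal_residuals)[k - 1]


def simulate(n: int, rng: random.Random) -> tuple[list[int], list[float]]:
    """Hypothetical data: group 0 has noise std 1, group 1 has noise std 5."""
    groups = [rng.randint(0, 1) for _ in range(n)]
    residuals = [abs(rng.gauss(0.0, 1.0 if g == 0 else 5.0)) for g in groups]
    return groups, residuals


rng = random.Random(0)
_, cal = simulate(2000, rng)
q = split_conformal_halfwidth(cal, alpha=0.1)

groups, test = simulate(20000, rng)
covered = [r <= q for r in test]
marginal = sum(covered) / len(covered)
per_group = {
    g: sum(c for c, gg in zip(covered, groups) if gg == g) / groups.count(g)
    for g in (0, 1)
}
print(f"q={q:.2f} marginal={marginal:.3f} per-group={per_group}")
```

The intervals achieve roughly the promised 90% coverage overall. However, the noisy group is covered only about 80% of the time, while the predictable group is covered almost always.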

“But Bayesian deep learning doesn’t work”

Suppose you followed the field of Bayesian deep learning only superficially a few years ago and then stopped paying attention, distracted by all the buzz around LLMs and generative AI. You could then be excused for believing that it has elegant principles and a strong motivation but does not actually work in practice. Indeed, this was truly the case until very recently.

However, in the last few years, the field has seen many breakthroughs that allow this framework to finally deliver on its promises. For instance, performing Bayesian inference over millions of neural network parameters used to be computationally intractable, but we now have scalable approximate inference methods that are only marginally more costly than standard neural network training.

Moreover, it used to be hard to choose the right model class for a given problem, but we have made great progress in automating this decision away from the user thanks to advances in Bayesian model selection.
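Bayesian model selection typically compares candidate models by their marginal likelihood (the evidence), which trades off data fit against model complexity automatically. As a small illustration, the sketch below scores polynomial degrees for Bayesian linear regression, where the evidence has a closed form. The data, prior scale, and noise level are hypothetical. For neural networks, the evidence itself has to be approximated, e.g., with a Laplace approximation.

```python
import numpy as np


def log_evidence(x: np.ndarray, y: np.ndarray, degree: int,
                 prior_std: float = 1.0, noise_std: float = 0.1) -> float:
    """Log marginal likelihood log p(y | degree) of Bayesian polynomial regression.

    Model: y = Phi(x) w + eps, w ~ N(0, prior_std^2 I), eps ~ N(0, noise_std^2 I),
    so marginally y ~ N(0, C) with C = prior_std^2 Phi Phi^T + noise_std^2 I.
    """
    phi = np.vander(x, degree + 1, increasing=True)  # features 1, x, ..., x^degree
    cov = prior_std**2 * phi @ phi.T + noise_std**2 * np.eye(len(x))
    _, logdet = np.linalg.slogdet(cov)
    quad = y @ np.linalg.solve(cov, y)
    return float(-0.5 * (quad + logdet + len(x) * np.log(2 * np.pi)))


rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, size=40)
y = 0.5 - x + 2 * x**2 + rng.normal(0, 0.1, size=40)  # hypothetical quadratic data

scores = {d: log_evidence(x, y, d) for d in range(7)}
best = max(scores, key=scores.get)
print({d: round(s, 1) for d, s in scores.items()}, "best degree:", best)
```

Degrees that are too low fit the data poorly and score badly. Higher degrees fit just as well but are penalized for spreading prior mass over many functions the data rule out, so no hand-tuned validation split is needed.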

While it is still nearly impossible to design a meaningful prior distribution over neural network parameters, we have found different ways to specify priors directly over functions, which is much more intuitive for most practitioners. Finally, some troubling conundrums related to the behavior of Bayesian neural network posteriors, such as the infamous cold posterior effect, are much better understood now.
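The classic example of a prior stated directly over functions is a Gaussian process. Here, beliefs such as “the extra delay varies smoothly with departure time” become a kernel lengthscale and amplitude rather than a distribution over millions of weights. The sketch below only illustrates that idea. The function-space priors used for Bayesian neural networks are more involved, and all names and values here are hypothetical.

```python
import numpy as np


def rbf_kernel(xa: np.ndarray, xb: np.ndarray,
               lengthscale: float = 1.0, amplitude: float = 1.0) -> np.ndarray:
    """Squared-exponential covariance k(x, x') = a^2 exp(-(x - x')^2 / (2 l^2))."""
    sq_dist = (xa[:, None] - xb[None, :]) ** 2
    return amplitude**2 * np.exp(-0.5 * sq_dist / lengthscale**2)


def sample_gp_prior(x: np.ndarray, n_samples: int, lengthscale: float,
                    amplitude: float, rng: np.random.Generator,
                    jitter: float = 1e-6) -> np.ndarray:
    """Draw functions f ~ GP(0, k) evaluated at inputs x; shape (n_samples, len(x))."""
    cov = rbf_kernel(x, x, lengthscale, amplitude) + jitter * np.eye(len(x))
    chol = np.linalg.cholesky(cov)  # jitter keeps the matrix numerically positive definite
    return (chol @ rng.standard_normal((len(x), n_samples))).T


# Hypothetical belief: extra delay (minutes) as a function of departure time (hours).
rng = np.random.default_rng(0)
t = np.linspace(7.0, 10.0, 50)
smooth = sample_gp_prior(t, 3, lengthscale=1.0, amplitude=5.0, rng=rng)
wiggly = sample_gp_prior(t, 3, lengthscale=0.1, amplitude=5.0, rng=rng)
print(smooth.shape, wiggly.shape)  # (3, 50) (3, 50)
```

Changing a single, interpretable number (the lengthscale) switches the prior from slowly varying to rapidly fluctuating functions. Achieving the same effect by hand-tuning a weight-space prior would be very hard.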

Armed with these tools, Bayesian deep learning models have started to have a beneficial impact in many domains, including healthcare, robotics, and science. For instance, we have shown this for predicting health outcomes of intensive care unit patients from time series data. There, a Bayesian deep learning approach not only yields better predictions and uncertainty estimates but also leads to recommendations that are more interpretable for medical practitioners. Our position paper contains detailed accounts of this and other noteworthy examples.

However, Bayesian deep learning is unfortunately still not as easy to use as standard deep learning, which you can do these days in a few lines of PyTorch code.

If you want to use a Bayesian deep learning model, first, you have to think about specifying the prior. This is a crucial component of the Bayesian paradigm and might sound like a chore, but if you actually have prior knowledge about the task at hand, this can really improve your performance.

Then, you still have to choose an approximate inference algorithm, depending on how much computational budget you are willing to spend. Some algorithms are very cheap (such as the Laplace approximation), but if you want really high-fidelity uncertainty estimates, you might have to opt for a more expensive one (e.g., Markov chain Monte Carlo).
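To make the cheap end of this spectrum concrete, here is a minimal sketch of a Laplace approximation. It uses Bayesian logistic regression as a stand-in for a neural network; in deep learning, the Hessian is usually approximated, e.g., restricted to the last layer. The sketch finds the MAP weights with Newton’s method and uses the inverse Hessian of the negative log posterior as the Gaussian posterior covariance. It then computes predictive probabilities with MacKay’s probit approximation. The data, prior precision, and function names are hypothetical. MCMC would instead draw many posterior samples, at a much higher cost.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def laplace_logistic(X: np.ndarray, y: np.ndarray, prior_prec: float = 1.0,
                     n_newton: int = 25) -> tuple[np.ndarray, np.ndarray]:
    """Laplace approximation N(w_map, H^-1) for Bayesian logistic regression.

    Prior: w ~ N(0, prior_prec^-1 I). X: (n, d) design matrix, y: (n,) in {0, 1}.
    """
    d = X.shape[1]
    w = np.zeros(d)
    for _ in range(n_newton):  # Newton's method on the negative log posterior
        p = sigmoid(X @ w)
        grad = X.T @ (p - y) + prior_prec * w
        hess = X.T @ (X * (p * (1 - p))[:, None]) + prior_prec * np.eye(d)
        w = w - np.linalg.solve(hess, grad)
    p = sigmoid(X @ w)
    hess = X.T @ (X * (p * (1 - p))[:, None]) + prior_prec * np.eye(d)
    return w, np.linalg.inv(hess)  # posterior mean and covariance


def predictive_prob(X_new: np.ndarray, w_map: np.ndarray, cov: np.ndarray) -> np.ndarray:
    """Approximate p(y=1 | x) by integrating the logit over the Gaussian posterior (probit approx.)."""
    mean = X_new @ w_map
    var = np.einsum("nd,de,ne->n", X_new, cov, X_new)
    return sigmoid(mean / np.sqrt(1.0 + np.pi * var / 8.0))


rng = np.random.default_rng(0)
x = rng.uniform(-2, 2, size=200)
y = (rng.uniform(size=200) < sigmoid(2.0 * x)).astype(float)
X = np.column_stack([np.ones_like(x), x])  # bias + slope

w_map, cov = laplace_logistic(X, y)
X_test = np.array([[1.0, 0.5], [1.0, 10.0]])  # near the data and far from it
p_map = sigmoid(X_test @ w_map)               # plug-in (point-estimate) prediction
p_la = predictive_prob(X_test, w_map, cov)    # Laplace predictive
print(np.round(p_map, 3), np.round(p_la, 3))
```

Because the probit factor 1/sqrt(1 + πσ²/8) is at most 1, the Laplace predictive is never more confident than the plug-in MAP prediction. The gap grows far from the training inputs (here x = 10), where the posterior variance of the logit is larger.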

Finally, you have to find the right implementation of that algorithm that also works with your model. For instance, some inference algorithms might only work with certain types of normalization operators (e.g., layer norm vs. batch norm) or might not work with low-precision weights.

As a research community, we should make it a priority to make these tools easy to use for practitioners without a background in ML research.

The road ahead

This commentary on our position paper has hopefully convinced you that there is more to machine learning than predictive accuracies on a test set. Indeed, if you use predictions from an AI model to make decisions, in almost all circumstances, you should care about ways to incorporate your prior knowledge into the model and get uncertainty estimates out of it. If this is the case, trying out Bayesian deep learning is likely worth your while.

A good place to start is the Primer on Bayesian Neural Networks that I wrote together with three colleagues. I’ve also written a review on priors in Bayesian deep learning that is published open access. Once you understand the theoretical foundations and feel ready to get your hands dirty with some actual Bayesian deep learning in PyTorch, check out some popular libraries for inference methods such as the Laplace approximation, variational inference, and Markov chain Monte Carlo.

Finally, if you are a researcher and would like to get involved in the Bayesian deep learning community, especially contributing to the goal of better benchmarking to show the positive impact on real decision outcomes and to the goal of building easy-to-use software tools for practitioners, feel free to reach out to me.
