Mixture of Experts LLMs: Key Concepts Explained

Mixture of Experts (MoE) is a type of neural network architecture that employs sub-networks (experts) to process specific parts of the input.

Only a subset of experts is activated per input, enabling models to scale efficiently. MoE models can leverage expert parallelism by distributing experts across multiple devices, enabling large-scale deployments while maintaining efficient inference.

MoE uses gating and load balancing mechanisms to dynamically route inputs to the most relevant experts, ensuring targeted and evenly distributed computation. Parallelizing the experts, along with the data, is key to an optimized training pipeline.

MoEs train faster than dense LLMs and achieve better or comparable performance on many benchmarks, especially in multi-domain tasks. Challenges include load balancing, distributed training complexity, and tuning for stability and efficiency.

Scaling LLMs comes at a tremendous computational cost. Bigger models enable more powerful capabilities but require expensive hardware and infrastructure, also resulting in higher latency. So far, we’ve mainly achieved performance gains by making models larger, but this trajectory is not sustainable due to escalating costs, increasing energy consumption, and diminishing returns in performance improvement.

When considering the enormous amount of data and the wide variety of domains on which huge LLMs are trained, it’s natural to ask: instead of using the entire LLM’s capacity, could we pick only the portion of the LLM that is relevant to a particular input? This is the key idea behind Mixture of Experts LLMs.

Mixture of Experts (MoE) is a type of neural network architecture in which parts of the network are divided into specialized sub-networks (experts), each optimized for a specific domain of the input space. During inference, only a part of the model is activated depending on the given input, significantly reducing the computational cost. Further, these experts can be distributed across multiple devices, allowing for parallel processing and efficient large-scale distributed setups.

On an abstract, conceptual level, we can imagine MoE experts specialized in processing specific input types. For example, we might have separate experts for different language translations or different experts for text generation, summarization, solving analytical problems, or writing code. These sub-networks have separate parameters but are part of the single model, sharing blocks and layers at different levels.

In this article, we explore the core concepts of MoE, including architectural blocks, gating mechanisms, and load balancing. We’ll also discuss the nuances of training MoEs and analyze why they are faster to train and yield superior performance in multi-domain tasks. Finally, we address key challenges of implementing MoEs, including distributed training complexity and maintaining stability.

Bridging LLM capacity and scalability with MoE layers

Since the introduction of Transformer-based models, LLM capabilities have continuously expanded through advancements in architecture, training methods, and hardware innovation. Scaling up LLMs has been shown to improve performance. Accordingly, we’ve seen rapid growth in the scale of the training data, model sizes, and infrastructure supporting training and inference.

Pre-trained LLMs have reached sizes of billions and trillions of parameters. Training these models takes extremely long and is expensive, and their inference costs scale proportionally with their size.

In a conventional LLM, all parameters of the trained model are used during inference. The table below gives an overview of the size of several impactful LLMs. It presents the total parameters of each model and the number of parameters activated during inference:

The last five models (highlighted) exhibit a significant difference between the total number of parameters and the number of parameters active during inference. The Switch-Language Transformer, Mixtral, GLaM, GShard, and DeepSeekMoE are Mixture of Experts LLMs (MoEs), which execute only a portion of the model’s computational graph for each input during inference.

MoE building blocks and architecture

The foundational idea behind the Mixture of Experts was introduced before the era of Deep Learning, back in the ’90s, with “Adaptive Mixtures of Local Experts” by Robert Jacobs, together with the “Godfather of AI” Geoffrey Hinton and colleagues. They introduced the idea of dividing the neural network into multiple specialized “experts” managed by a gating network.

With the Deep Learning boom, MoE resurfaced. In 2017, Noam Shazeer and colleagues (including Geoffrey Hinton once again) proposed the Sparsely-Gated Mixture-of-Experts Layer for recurrent neural language models.

The Sparsely-Gated Mixture-of-Experts Layer consists of multiple experts (feed-forward networks) and a trainable gating network that selects the combination of experts to process each input. The gating mechanism enables conditional computation, directing processing to the parts of the network (experts) that are most suited to each part of the input text.

Such an MoE layer can be integrated into LLMs, replacing the feed-forward layer in the Transformer block. Its key components are the experts, the gating mechanism, and the load balancing.

Experts

The fundamental idea of the MoE approach is to introduce sparsity in the neural network layers. Instead of a dense layer where all parameters are used for every input (token), the MoE layer consists of several “expert” sub-layers. A gating mechanism determines which subset of “experts” is used for each input. The selective activation of sub-layers makes the MoE layer sparse, with only a part of the model parameters used for every input token.

How are experts integrated into LLMs?

In the Transformer architecture, MoE layers are integrated by modifying the feed-forward layers to include sub-layers. The exact implementation of this replacement varies, depending on the end goal and priorities: replacing all feed-forward layers with MoEs will maximize sparsity and reduce the computational cost, while replacing only a subset of feed-forward layers may help with training stability. For example, in the Switch Transformer, all feed-forward components are replaced with the MoE layer. In GShard and GLaM, only every other feed-forward layer is replaced.
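To make the two replacement strategies concrete, here is a minimal sketch that only computes which feed-forward layers of a Transformer stack become MoE layers. The `ffn_layout` function and its `moe_every` parameter are hypothetical names for illustration, and placing the first MoE layer in the second block when `moe_every=2` is our own assumption, not a detail taken from the GShard or GLaM papers.

```python
def ffn_layout(num_layers: int, moe_every: int) -> list[str]:
    """Return the FFN type ("moe" or "dense") for each Transformer block.

    moe_every=1 replaces every FFN with an MoE layer (Switch Transformer style);
    moe_every=2 replaces every other FFN (GShard/GLaM style). Putting the MoE
    layer at the end of each group of `moe_every` blocks is an arbitrary choice.
    """
    if moe_every < 1:
        raise ValueError("moe_every must be >= 1")
    return [
        "moe" if (i + 1) % moe_every == 0 else "dense"
        for i in range(num_layers)
    ]


print(ffn_layout(4, moe_every=1))  # ['moe', 'moe', 'moe', 'moe']
print(ffn_layout(4, moe_every=2))  # ['dense', 'moe', 'dense', 'moe']
```

Only the FFN slots change; attention and normalization layers stay dense and shared in both layouts.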

The other LLM layers and parameters remain unchanged, and their parameters are shared between the experts. An analogy to this system with specialized and shared parameters could be the completion of a company project. The incoming project needs to be processed by the core team—they contribute to every project. However, at some stages of the project, they may require different specialized consultants, selectively brought in based on their expertise. Collectively, they form a system that shares the core team’s capacity and profits from expert consultants’ contributions.

Gating mechanism

In the previous section, we have introduced the abstract concept of an “expert,” a specialized subset of the model’s parameters. These parameters are applied to the high-dimensional representation of the input at different levels of the LLM architecture. During training, these subsets become “skilled” at handling specific types of data. The gating mechanism plays a key role in this system.

What is the role of the gating mechanism in an MoE layer?

When an MoE LLM is trained, all the experts’ parameters are updated. The gating mechanism learns to distribute the input tokens to the most appropriate experts, and in turn, experts adapt to optimally process the types of input frequently routed their way. At inference, only relevant experts are activated based on the input. This enables a system with specialized parts to handle diverse types of inputs. In our company analogy, the gating mechanism is like a manager delegating tasks within the team.

The gating component is a trainable network within the MoE layer. The gating mechanism has several responsibilities:

- Scoring the experts based on input. For N experts, N scores are calculated, corresponding to the experts’ relevance to the input token.

- Selecting the experts to be activated. Based on the experts’ scoring, a subset of the experts is chosen to be activated. This is usually done by top-k selection.

- Load balancing. Naive selection of top-k experts would lead to an imbalance in token distribution among experts. Some experts may become too specialized by only handling a minimal input range, while others would be overly generalized. During inference, routing most of the input to a small subset of experts would leave some experts overloaded and others underutilized. Thus, the gating mechanism has to distribute the load evenly across all experts.

How is gating implemented in MoE LLMs?

Let’s consider an MoE layer consisting of n experts, denoted Expert_i(x) with i = 1, …, n, that takes input x. The MoE layer’s output is then calculated as

$$ y = \sum_{i=1}^{n} g_i(x)\,\mathrm{Expert}_i(x) $$

where g_i(x) is the i-th expert’s score, computed by the gating layer using the Softmax function. The gating layer’s outputs serve as the weights when averaging the experts’ outputs to compute the MoE layer’s final output. If g_i(x) is 0, we can skip computing Expert_i(x) entirely.
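The weighted combination described above can be sketched in a few lines of plain Python. This is a toy illustration, assuming experts are arbitrary functions on small vectors and that the gate values have already been computed; the `make_expert` helper and the three toy experts are hypothetical. The `called` list shows that an expert whose gate value is zero is never evaluated, which is where the computational savings come from.

```python
from typing import Callable, Sequence

Vector = list[float]
Expert = Callable[[Vector], Vector]


def moe_output(x: Vector, gates: Sequence[float], experts: Sequence[Expert]) -> Vector:
    """Compute sum_i g_i * Expert_i(x), skipping experts whose gate value is 0."""
    y = [0.0] * len(x)
    for g, expert in zip(gates, experts):
        if g == 0.0:
            continue  # sparse gating: this expert is never evaluated
        out = expert(x)
        y = [yi + g * oi for yi, oi in zip(y, out)]
    return y


# Toy experts (hypothetical) that record when they are called.
called: list[str] = []


def make_expert(name: str, fn: Callable[[float], float]) -> Expert:
    def expert(x: Vector) -> Vector:
        called.append(name)
        return [fn(v) for v in x]
    return expert


experts = [
    make_expert("double", lambda v: 2 * v),
    make_expert("plus_one", lambda v: v + 1),
    make_expert("negate", lambda v: -v),
]
gates = [0.75, 0.0, 0.25]  # e.g., output of a top-2 gate: expert 1 not selected
y = moe_output([1.0, 2.0], gates, experts)
print(y)       # [1.25, 2.5]
print(called)  # ['double', 'negate']
```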

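The general framework of an MoE gating mechanism, as formulated for the Sparsely-Gated Mixture-of-Experts Layer, keeps only the k largest router logits before applying Softmax:

$$ g(x) = \mathrm{Softmax}\big(\mathrm{KeepTopK}(x \cdot W_g,\ k)\big), \qquad \mathrm{KeepTopK}(v, k)_i = \begin{cases} v_i & \text{if } v_i \text{ is among the top } k \text{ elements of } v \\ -\infty & \text{otherwise} \end{cases} $$

where W_g is the trainable gating weight matrix and g_i(x) is the i-th component of g(x). Setting the non-selected logits to negative infinity gives those experts a score of exactly zero.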
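A common concrete form of this gating framework, introduced with the Sparsely-Gated MoE layer, computes router logits with a trainable matrix, keeps only the k largest, and applies Softmax so that every other expert gets a score of exactly zero. The following is a minimal, dependency-free sketch of that idea; the weight values are made up for the example, and a real implementation would use batched tensor operations instead of Python lists.

```python
import math


def keep_top_k(logits: list[float], k: int) -> list[float]:
    """Keep the k largest logits; set the rest to -inf so Softmax maps them to 0."""
    top = set(sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k])
    return [v if i in top else -math.inf for i, v in enumerate(logits)]


def softmax(z: list[float]) -> list[float]:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]  # math.exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]


def gate(x: list[float], w_g: list[list[float]], k: int) -> list[float]:
    """g(x) = Softmax(KeepTopK(x W_g, k)); w_g has shape [d_model][n_experts]."""
    n_experts = len(w_g[0])
    logits = [sum(x[d] * w_g[d][i] for d in range(len(x))) for i in range(n_experts)]
    return softmax(keep_top_k(logits, k))


x = [1.0, 0.0]
w_g = [[2.0, 1.0, 0.0, -1.0],  # hypothetical router weights
       [0.5, 0.5, 0.5, 0.5]]
gates = gate(x, w_g, k=2)
print([round(g, 3) for g in gates])  # [0.731, 0.269, 0.0, 0.0]
```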

Some specific examples are:

- Top-1 gating: Each token is directed to a single expert, the one with the highest score. This is used in the Switch Transformer’s Switch layer. It is computationally efficient but requires careful load balancing to distribute tokens evenly across experts.

- Top-2 gating: Each token is sent to two experts. This approach is used in Mixtral.

- Noisy top-k gating: Introduced with the Sparsely-Gated Mixture-of-Experts Layer, this approach adds tunable Gaussian noise (standard normal noise scaled by a learned, input-dependent factor) to the gating logits before top-k selection and Softmax, which helps with load balancing. GShard uses a noisy top-2 strategy, adding more advanced load-balancing techniques.
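The noisy variant can be sketched by adding a noise term to the router logits before the top-k selection. In the Sparsely-Gated MoE paper, the standard-normal noise is scaled per expert by a Softplus of a second trainable projection, and this sketch follows that form. Applying noise only during training, the toy weights, and the function names are assumptions made for illustration. With `k=1` and `k=2`, the same function produces top-1 and top-2 gating.

```python
import math
import random


def softplus(v: float) -> float:
    return math.log1p(math.exp(v))  # fine for small inputs; not overflow-safe


def matvec(x: list[float], w: list[list[float]]) -> list[float]:
    """x: [d_model], w: [d_model][n_experts] -> [n_experts]."""
    return [sum(x[d] * w[d][i] for d in range(len(x))) for i in range(len(w[0]))]


def noisy_top_k_gate(x, w_g, w_noise, k, rng, train=True):
    clean = matvec(x, w_g)
    if train:
        # H(x)_i = (x W_g)_i + StandardNormal() * Softplus((x W_noise)_i)
        scale = [softplus(s) for s in matvec(x, w_noise)]
        logits = [c + rng.gauss(0.0, 1.0) * s for c, s in zip(clean, scale)]
    else:
        logits = clean  # assumption: no noise at inference
    top = set(sorted(range(len(logits)), key=logits.__getitem__, reverse=True)[:k])
    m = max(logits[i] for i in top)
    # Softmax over the top-k logits only; all other experts get exactly 0.
    exps = [math.exp(logits[i] - m) if i in top else 0.0 for i in range(len(logits))]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)  # seeded for reproducibility
x = [1.0, -0.5]
w_g = [[1.0, 0.5, -0.5, 0.0], [0.0, 1.0, 0.5, -1.0]]  # hypothetical router weights
w_noise = [[0.0] * 4, [0.0] * 4]                       # hypothetical noise weights
train_gates = noisy_top_k_gate(x, w_g, w_noise, k=2, rng=rng, train=True)
eval_gates = noisy_top_k_gate(x, w_g, w_noise, k=1, rng=rng, train=False)
print(train_gates)  # two non-zero entries that sum to 1
print(eval_gates)   # [1.0, 0.0, 0.0, 0.0]
```

Because the noise can reorder experts whose logits are close, tokens near a decision boundary are occasionally sent to the runner-up expert, which spreads the load during training.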

Load balancing

The straightforward gating via scoring and selecting top-k experts can result in an imbalance of token distribution among experts. Some experts may become overloaded, being assigned to process a bigger portion of tokens, while others are selected much less frequently and stay underutilized. This causes a “collapse” in routing, hurting the effectiveness of the MoE approach in two ways.

First, the frequently selected experts are continuously updated during training, thus performing better than experts that don’t receive enough data to train properly.

Second, load imbalance causes memory and computational performance problems. When the experts are distributed across different GPUs and/or machines, an imbalance in expert selection translates into network, memory, and expert capacity bottlenecks. If one expert has to handle ten times as many tokens as another, the total processing time increases, because subsequent computations are blocked until all experts finish processing their assigned load.
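To see why one overloaded expert dominates the layer’s latency, consider a deliberately simplified cost model: each expert sits on its own device, all devices process tokens at the same speed, and the layer finishes only when its busiest expert does. Under these assumptions (which ignore communication costs), the slowdown relative to perfectly balanced routing is the ratio of the maximum load to the mean load. The function names are hypothetical.

```python
from collections import Counter


def expert_loads(assignments: list[int], n_experts: int) -> list[int]:
    """Count how many tokens the router sent to each expert."""
    counts = Counter(assignments)
    return [counts.get(i, 0) for i in range(n_experts)]


def imbalance_slowdown(loads: list[int]) -> float:
    """Busiest expert's load divided by the ideal (mean) load.

    Simplified model: one expert per device, equal per-token cost, and the
    layer waits for the slowest expert before the next computation can start.
    """
    mean = sum(loads) / len(loads)
    return max(loads) / mean


balanced = [0, 1, 2, 3] * 8                       # 32 tokens, 8 per expert
skewed = [0] * 20 + [1] * 2 + [2] * 5 + [3] * 5   # 32 tokens, expert 0 overloaded
print(imbalance_slowdown(expert_loads(balanced, 4)))  # 1.0
print(imbalance_slowdown(expert_loads(skewed, 4)))    # 2.5
```

In the skewed case, three of the four devices sit partly idle while the first one works through 20 tokens, so the layer takes 2.5 times as long as it would with even routing.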

Strategies for improving load balancing in MoE LLMs include:

• Adding random noise in the scoring process helps redistribute tokens among experts.

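• Adding an auxiliary load-balancing loss to the overall model loss. It penalizes uneven routing, encouraging the router to send a roughly equal fraction of the tokens to each expert. For example, in the Switch Transformer, for N experts and a batch B with T tokens, the loss is

$$ \text{loss} = \alpha \cdot N \cdot \sum_{i=1}^{N} f_i \cdot P_i, \qquad f_i = \frac{1}{T} \sum_{x \in \mathcal{B}} \mathbb{1}\{\operatorname{argmax} p(x) = i\}, \qquad P_i = \frac{1}{T} \sum_{x \in \mathcal{B}} p_i(x) $$

where f_i is the fraction of tokens routed to expert i, P_i is the fraction of the router probability allocated to expert i, p_i(x) is the router probability of expert i for token x, and α is a scaling hyperparameter.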

• DeepSeekMoE introduced an additional device-level loss to ensure that tokens are routed evenly across the underlying infrastructure hosting the experts. The experts are divided into g groups, with each group deployed to a single device.

• Setting a maximum capacity for each expert. GShard and the Switch Transformer define a maximum number of tokens that can be processed by one expert. If the capacity is exceeded, the overflowing tokens are passed directly to the next layer (skipping all experts) or rerouted to the next-best expert that has not yet reached capacity.
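The auxiliary loss and the capacity limit from the list above can be sketched for top-1 routing as follows. The loss mirrors the Switch Transformer formula α · N · Σ f_i · P_i; the `alpha` default, rounding the capacity up with `ceil`, and processing tokens in batch order are assumptions of this sketch. Overflowing tokens are simply returned so they can be passed on through the residual connection; rerouting them to the next-best expert is not implemented here.

```python
import math


def switch_aux_loss(router_probs: list[list[float]], alpha: float = 0.01) -> float:
    """alpha * N * sum_i f_i * P_i for top-1 routing.

    router_probs[t][i]: router Softmax probability of expert i for token t.
    f_i: fraction of tokens whose top-1 expert is i.
    P_i: mean router probability assigned to expert i.
    """
    T, N = len(router_probs), len(router_probs[0])
    f, P = [0.0] * N, [0.0] * N
    for probs in router_probs:
        top1 = max(range(N), key=probs.__getitem__)
        f[top1] += 1.0 / T
        for i, p in enumerate(probs):
            P[i] += p / T
    return alpha * N * sum(fi * pi for fi, pi in zip(f, P))


def dispatch_with_capacity(top1: list[int], n_experts: int, capacity_factor: float):
    """Assign tokens (in order) to their top-1 expert until that expert is full.

    Returns (per-expert token indices, overflow token indices).
    """
    capacity = math.ceil(len(top1) / n_experts * capacity_factor)
    buckets: list[list[int]] = [[] for _ in range(n_experts)]
    overflow: list[int] = []
    for t, e in enumerate(top1):
        if len(buckets[e]) < capacity:
            buckets[e].append(t)
        else:
            overflow.append(t)  # skips the experts, continues via the residual path
    return buckets, overflow


balanced = [[1.0 if i == t else 0.0 for i in range(4)] for t in range(4)]
collapsed = [[1.0, 0.0, 0.0, 0.0] for _ in range(4)]
print(switch_aux_loss(balanced, alpha=1.0))   # 1.0
print(switch_aux_loss(collapsed, alpha=1.0))  # 4.0

buckets, overflow = dispatch_with_capacity([0, 0, 0, 0, 1, 2, 0, 3], n_experts=4, capacity_factor=1.0)
print(buckets)   # [[0, 1], [4], [5], [7]]
print(overflow)  # [2, 3, 6]
```

With one token per expert, the loss equals α (the value for perfectly uniform routing), while sending every token to the same expert multiplies it by N. Raising the capacity factor drops fewer tokens at the cost of more padding and memory per expert.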

Scalability and challenges in MoE LLMs

Selecting the number of experts

The number of experts is a key consideration when designing an MoE LLM. A larger number of experts increases a model’s capacity at the cost of increased infrastructure demands. Using too few experts, on the other hand, hurts performance: if the tokens assigned to one expert are too diverse, the expert cannot specialize sufficiently.

The MoE LLMs’ scalability advantage is due to the conditional activation of experts. Thus, keeping the number of active experts k fixed but increasing the total number of experts n increases the model’s capacity (larger total number of parameters). Experiments conducted by the Switch Transformer’s developers underscore this. With a fixed number of active parameters, increasing the number of experts consistently led to improved task performance. Similar results were observed for MoE Transformers with GShard.
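The effect of increasing n while keeping k fixed can be checked with a back-of-the-envelope parameter count. This sketch assumes each expert is a plain two-matrix feed-forward network without biases plus a router matrix that is always used, and it counts only a single MoE layer; the dimensions are arbitrary example values, not those of any particular model, and `moe_ffn_params` is a hypothetical helper.

```python
def moe_ffn_params(d_model: int, d_ff: int, n_experts: int, top_k: int) -> tuple[int, int]:
    """Return (total, active-per-token) parameters of one MoE FFN layer.

    Each expert: d_model -> d_ff -> d_model, no biases (an assumption).
    Router: a d_model x n_experts matrix, evaluated for every token.
    """
    expert = 2 * d_model * d_ff
    router = d_model * n_experts
    return n_experts * expert + router, top_k * expert + router


for n in (8, 16, 32, 64):
    total, active = moe_ffn_params(d_model=1024, d_ff=4096, n_experts=n, top_k=2)
    print(f"n={n:>2}  total={total:>11,}  active={active:>10,}")
```

Total parameters grow linearly with n, while the active count changes only through the small router matrix, which is why capacity can grow without a matching increase in per-token compute.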

The Switch Transformers have 16 to 128 experts, GShard can scale up from 128 to 2048 experts, and Mixtral uses just 8. DeepSeekMoE takes a more advanced approach by splitting experts into fine-grained, smaller experts. While the number of expert parameters stays constant, the number of possible expert combinations increases. For example, N=8 experts with hidden dimension h can be split into m=2 parts each, giving N*m=16 experts of dimension h/m. To keep the number of activated parameters the same, top-k routing then selects k*m=4 of the smaller experts instead of k=2 of the original ones, so the number of possible combinations grows from 28 (2 out of 8) to 1,820 (4 out of 16). This increases routing flexibility and enables a more targeted distribution of knowledge across experts.
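The combinatorics of fine-grained expert segmentation can be checked with Python’s `math.comb`. The `routing_combinations` helper is a hypothetical name; it assumes, as in the example above, that k grows by the same factor m that the experts are split into, so the number of activated parameters stays constant.

```python
from math import comb


def routing_combinations(n_experts: int, top_k: int, split: int = 1) -> int:
    """Number of distinct expert subsets a token can be routed to.

    Each expert is split into `split` smaller experts, and top_k is scaled by
    the same factor to keep the activated parameter count unchanged.
    """
    return comb(n_experts * split, top_k * split)


print(routing_combinations(8, 2))           # 28
print(routing_combinations(8, 2, split=2))  # 1820
```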

When tokens that need the same common knowledge are routed to different experts, several experts may each end up learning that knowledge, resulting in redundancy among experts. To address this problem, some approaches (like DeepSeek and DeepSpeed) assign dedicated experts to act as a shared knowledge base. These experts are exempt from the gating mechanism and receive every input token.
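Combining always-active shared experts with routed experts can be sketched as follows, loosely following the DeepSeekMoE formulation in which shared-expert outputs are added without gate weights. The residual connection around the layer is omitted, the experts are toy functions, and the gate values are assumed to come from a top-k router like the one described in the gating section of this article; `shared_plus_routed` is a hypothetical name.

```python
from typing import Callable, Sequence

Vector = list[float]
Expert = Callable[[Vector], Vector]


def shared_plus_routed(
    x: Vector,
    shared_experts: Sequence[Expert],
    routed_experts: Sequence[Expert],
    gates: Sequence[float],
) -> Vector:
    """Shared experts (always active, unweighted) plus gated routed experts."""
    y = [0.0] * len(x)
    for expert in shared_experts:  # bypass the router: every token goes here
        y = [a + b for a, b in zip(y, expert(x))]
    for g, expert in zip(gates, routed_experts):
        if g != 0.0:  # only experts selected by the router are evaluated
            y = [a + g * b for a, b in zip(y, expert(x))]
    return y


shared = [lambda v: [0.5 * a for a in v]]  # hypothetical shared expert
routed = [                                 # hypothetical routed experts
    lambda v: [2 * a for a in v],
    lambda v: [-a for a in v],
    lambda v: [a + 1 for a in v],
]
y = shared_plus_routed([1.0, 2.0], shared, routed, gates=[0.5, 0.0, 0.5])
print(y)  # [2.5, 4.5]
```

Because the shared expert sees every token, common knowledge can live there once, leaving the routed experts free to specialize.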

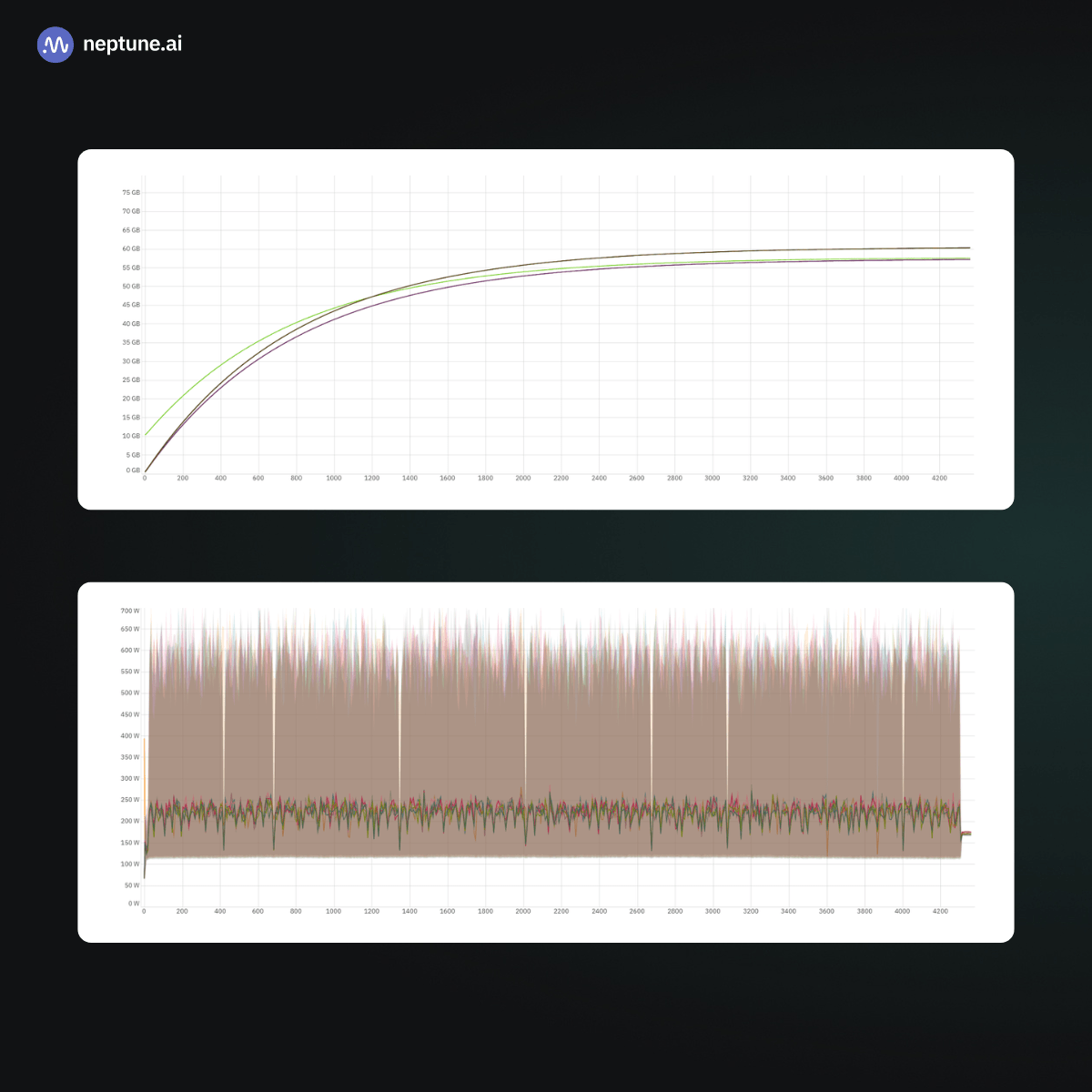

Training and inference infrastructure

While MoE LLMs can, in principle, be operated on a single GPU, they can only be scaled efficiently in a distributed architecture combining data, model, and pipeline parallelism with expert parallelism. The MoE layers are sharded across devices (i.e., their experts are distributed evenly) while the rest of the model (like dense layers and attention blocks) is replicated to each device.
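Expert parallelism comes down to two bookkeeping steps: deciding which device hosts which expert, and regrouping the router’s choices so that each device receives the tokens for its own experts. This sketch assumes an even, contiguous placement of experts and top-2 routing; in a real system, the regrouping is performed with an all-to-all collective across devices, which the plain Python lists here only stand in for. The per-device counts are also what a device-level balancing loss, like the one in DeepSeekMoE, tries to even out. Both function names are hypothetical.

```python
def expert_to_device(n_experts: int, n_devices: int) -> list[int]:
    """Place experts on devices in contiguous, equally sized blocks."""
    if n_experts % n_devices != 0:
        raise ValueError("n_experts must be divisible by n_devices")
    per_device = n_experts // n_devices
    return [e // per_device for e in range(n_experts)]


def dispatch_plan(
    token_experts: list[list[int]], placement: list[int], n_devices: int
) -> list[list[tuple[int, int]]]:
    """For each device, list the (token, expert) pairs it has to process.

    token_experts[t] holds the experts the router selected for token t.
    """
    plan: list[list[tuple[int, int]]] = [[] for _ in range(n_devices)]
    for t, experts in enumerate(token_experts):
        for e in experts:
            plan[placement[e]].append((t, e))
    return plan


placement = expert_to_device(n_experts=8, n_devices=4)
print(placement)  # [0, 0, 1, 1, 2, 2, 3, 3]
plan = dispatch_plan([[0, 5], [1, 2], [7, 6]], placement, n_devices=4)  # top-2 choices
print([len(p) for p in plan])  # [2, 1, 1, 2]
```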

This requires high-bandwidth and low-latency communication for both forward and backward passes. For example, Google’s latest Gemini 1.5 was trained on multiple 4096-chip pods of Google’s TPUv4 accelerators distributed across multiple data centers.

Hyperparameter optimization

Introducing MoE layers adds additional hyperparameters that have to be carefully adjusted to stabilize training and optimize task performance. Key hyperparameters to consider include the overall number of experts, their size, the number of experts to select in the top-k selection, and any load balancing parameters. Optimization strategies for MoE LLMs are discussed comprehensively in the papers introducing the Switch Transformer, GShard, and GLaM.

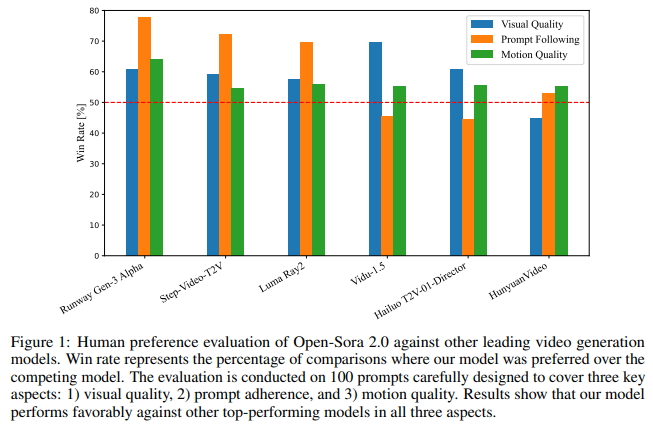

LLM performance vs. MoE LLM performance

Before we wrap up, let’s take a closer look at how MoE LLMs compare to standard LLMs:

- MoE models, unlike dense LLMs, activate only a portion of their parameters for each input. Compared to dense LLMs, MoE LLMs with the same number of active parameters can achieve better task performance, benefiting from a larger number of total trained parameters. For example, Mixtral 8x7B with 13B active parameters (and 47B total trained parameters) matches or outperforms LLaMA-2 with 13B parameters on benchmarks like MMLU, HellaSwag, PIQA, and MATH.

- MoEs are faster, and thus less expensive, to train. The Switch Transformer authors showed, for example, that the sparse MoE outperforms the dense Transformer baseline and reaches the same performance considerably faster. With the same number of FLOPs per token, the Switch Transformer reached T5-Base’s performance level seven times faster and outperformed it with further training.

What’s next for MoE LLMs?

Mixture of Experts (MoE) is an approach to scaling LLMs to trillions of parameters with conditional computation while avoiding exploding computational costs. MoE allows for the separation of learnable experts within the model, integrated into the shared model skeleton, which helps the model more easily adapt to multi-task, multi-domain learning objectives. However, this comes at the cost of new infrastructure requirements and the need for careful tuning of additional hyperparameters.

Novel architectural solutions for building experts, managing their routing, and stabilizing training are promising directions, with many more innovations to look forward to. Recent SoTA models like Google’s multi-modal Gemini 1.5 and the MoE variants of IBM’s enterprise-focused Granite 3.0 use the MoE architecture. DeepSeek R1, whose performance is comparable to GPT-4o and o1, is an MoE model with 671B total and 37B activated parameters, using 256 routed experts plus one shared expert in each MoE layer.

With the publication of open-source MoE LLMs such as DeepSeek R1 and V3, which rival or even surpass the performance of the aforementioned proprietary models, we are entering exciting times for democratized and scalable LLMs.