Essential Review Papers on Physics-Informed Neural Networks: A Curated Guide for Practitioners

Staying on top of a fast-growing research field is never easy.

I face this challenge firsthand as a practitioner in Physics-Informed Neural Networks (PINNs). New papers, be they algorithmic advancements or cutting-edge applications, are published at an accelerating pace by both academia and industry. While it is exciting to see this rapid development, it inevitably raises a pressing question:

How can one stay informed without spending countless hours sifting through papers?

This is where I have found review papers to be exceptionally valuable. Good review papers are effective tools that distill essential insights and highlight important trends. They are big time-savers that guide us through the flood of information.

In this blog post, I would like to share my personal, curated list of must-read review papers on PINNs that have been especially influential for my own understanding and use of PINNs. These papers cover key aspects of PINNs, including algorithmic developments, implementation best practices, and real-world applications.

In addition to what’s available in existing literature, I’ve included one of my own review papers, which provides a comprehensive analysis of common functional usage patterns of PINNs — a practical perspective often missing from academic reviews. This analysis is based on my review of around 200 arXiv papers on PINNs across various engineering domains in the past 3 years and can serve as an essential guide for practitioners looking to deploy these techniques to tackle real-world challenges.

For each review paper, I will explain why it deserves your attention, highlighting its unique perspective and the practical takeaways that you can benefit from immediately.

Whether you’re just getting started with PINNs, using them to tackle real-world problems, or exploring new research directions, I hope this collection makes navigating the busy field of PINN research easier for you.

Let’s cut through the complexity together and focus on what truly matters.

1️⃣ Scientific Machine Learning through Physics-Informed Neural Networks: Where we are and what’s next

📄 Paper at a glance

🔍 What it covers

- Authors: S. Cuomo, V. Schiano di Cola, F. Giampaolo, G. Rozza, M. Raissi, and F. Piccialli

- Year: 2022

- Link: arXiv

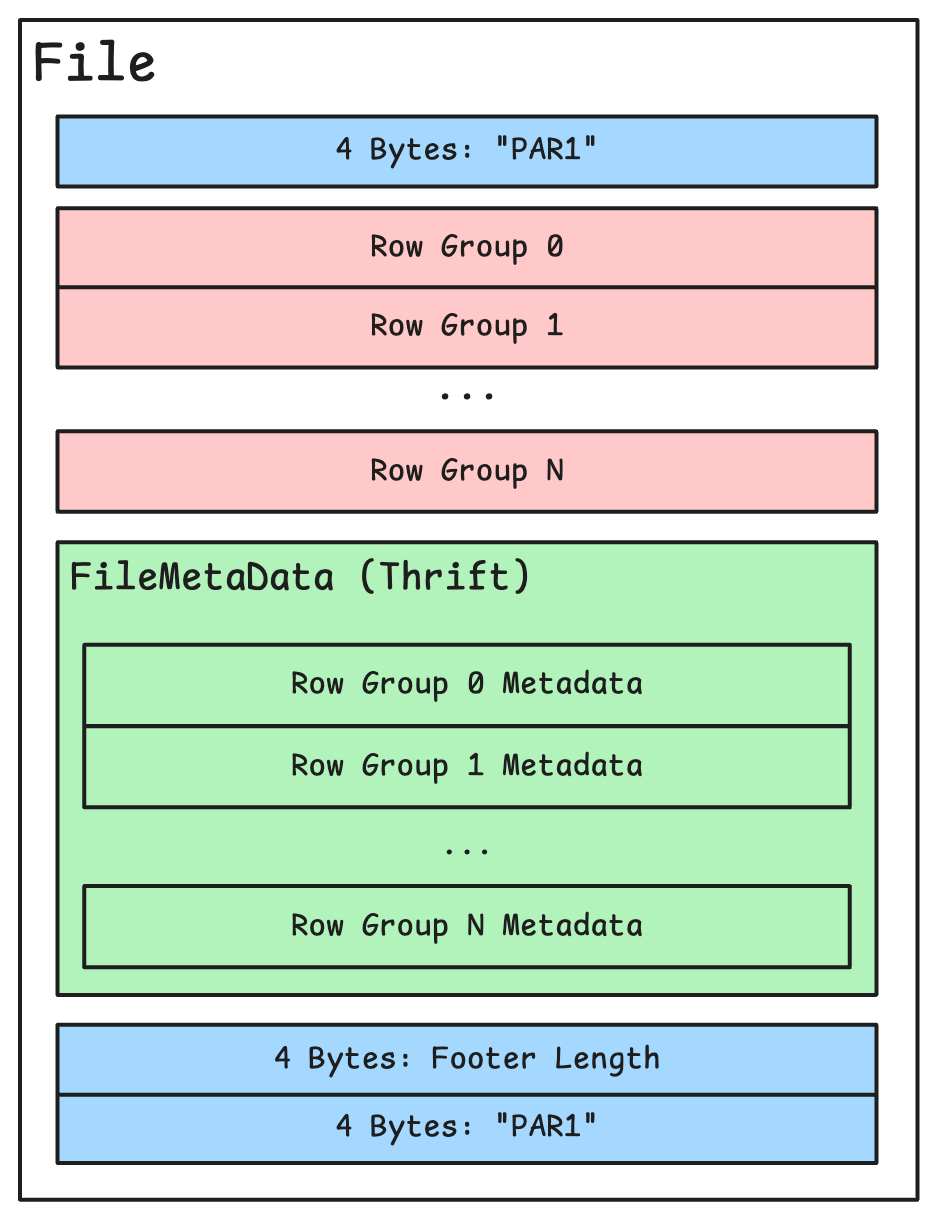

This review is structured around key themes in PINNs: the fundamental components that define their architecture, theoretical aspects of their learning process, and their application to various computing challenges in engineering. The paper also explores the available toolsets, emerging trends, and future directions.

✨ What’s unique

This review paper stands out in the following ways:

- One of the best introductions to PINN fundamentals. This paper takes a well-paced approach to explaining PINNs from the ground up. Section 2 systematically dissects the building blocks of a PINN, covering various underlying neural network architectures and their associated characteristics, how PDE constraints are incorporated, common training methodologies, and learning theory (convergence, error analysis, etc.) of PINNs.

- Putting PINNs in historical context. Rather than simply presenting PINNs as a standalone solution, the paper traces their development from earlier work on using deep learning to solve differential equations. This historical framing is valuable because it helps demystify PINNs by showing that they are an evolution of previous ideas, and it makes it easier for practitioners to see what alternatives are available.

- Equation-driven organization. Instead of just classifying PINN research by scientific domains (e.g., geoscience, material science, etc.) as many other reviews do, this paper categorizes PINNs based on the types of differential equations (e.g., diffusion problems, advection problems, etc.) they solve. This equation-first perspective encourages knowledge transfer as the same set of PDEs could be used across multiple scientific domains. In addition, it makes it easier for practitioners to see the strengths and weaknesses of PINNs when dealing with different types of differential equations.

🛠 Practical goodies

Beyond its theoretical insights, this review paper offers immediately useful resources for practitioners:

- A complete implementation example. In section 3.4, this paper walks through a full PINN implementation to solve a 1D Nonlinear Schrödinger equation. It covers translating equations into PINN formulations, handling boundary and initial conditions, defining neural network architectures, choosing training strategies, selecting collocation points, and applying optimization methods. All implementation details are clearly documented for easy reproducibility. The paper compares PINN performance by varying different hyperparameters, which could offer immediately applicable insights for your own PINN experiments.

- Available frameworks and software tools. Table 3 compiles a comprehensive list of major PINN toolkits, with detailed tool descriptions provided in section 4.3. The considered backends include not only TensorFlow and PyTorch but also Julia and JAX. This side-by-side comparison of different frameworks is especially useful for picking the right tool for your needs.
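To make the ingredients listed above a bit more tangible, here is a minimal sketch of how a PINN loss is typically assembled. It is not the paper's Schrödinger example: I assume a much simpler toy problem (du/dt + u = 0 with u(0) = 1), a tiny one-hidden-layer tanh network, and plain Python so that it runs anywhere. The names `make_network`, `pinn_loss`, and `ic_weight` are my own, and the input derivative is written out by hand, whereas a real framework (PyTorch, JAX, etc.) would obtain it through automatic differentiation and then train the parameters.

```python
import math
import random

def make_network(hidden=8, seed=0):
    """Random parameters for a one-hidden-layer tanh network u(t) (illustrative only)."""
    rng = random.Random(seed)
    return {
        "w": [rng.uniform(-1, 1) for _ in range(hidden)],  # input weights
        "b": [rng.uniform(-1, 1) for _ in range(hidden)],  # hidden biases
        "v": [rng.uniform(-1, 1) for _ in range(hidden)],  # output weights
    }

def u(params, t):
    """Network prediction u(t)."""
    return sum(v * math.tanh(w * t + b)
               for w, b, v in zip(params["w"], params["b"], params["v"]))

def du_dt(params, t):
    """Derivative of the network w.r.t. its input, written out by hand.
    In an autodiff framework this would come from automatic differentiation."""
    return sum(v * w * (1.0 - math.tanh(w * t + b) ** 2)
               for w, b, v in zip(params["w"], params["b"], params["v"]))

def pinn_loss(u_fn, du_fn, collocation_points, t0=0.0, u0=1.0, ic_weight=1.0):
    """Mean squared residual of du/dt + u = 0 plus an initial-condition penalty."""
    residuals = [du_fn(t) + u_fn(t) for t in collocation_points]
    physics = sum(r * r for r in residuals) / len(residuals)
    initial = (u_fn(t0) - u0) ** 2
    return physics + ic_weight * initial

# 20 evenly spaced collocation points in [0, 1] (an assumed, simple sampling choice)
collocation = [i / 19 for i in range(20)]
params = make_network()

loss_untrained = pinn_loss(lambda t: u(params, t),
                           lambda t: du_dt(params, t), collocation)
loss_exact = pinn_loss(lambda t: math.exp(-t),
                       lambda t: -math.exp(-t), collocation)

print(f"untrained network loss: {loss_untrained:.4f}")
print(f"exact solution loss:    {loss_exact:.2e}")
```

The exact solution drives both loss terms to zero, while the untrained network does not. Training a PINN amounts to adjusting the network parameters until its loss approaches that of the true solution.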

💡Who would benefit

- This review paper benefits anyone new to PINNs and looking for a clear, structured introduction.

- Engineers and developers looking for practical implementation guidance will find the realistic, hands-on demo and the thorough comparison of existing PINN frameworks most interesting. Additionally, they can find relevant prior work on differential equations similar to the ones in their current problem, which offers insights they can leverage in their own problem-solving.

- Researchers investigating theoretical aspects of PINN convergence, optimization, or efficiency can also greatly benefit from this paper.

2️⃣ From PINNs to PIKANs: Recent Advances in Physics-Informed Machine Learning

📄 Paper at a glance

- Authors: J. D. Toscano, V. Oommen, A. J. Varghese, Z. Zou, N. A. Daryakenari, C. Wu, and G. E. Karniadakis

- Year: 2024

- Link: arXiv

🔍 What it covers

This paper provides one of the most up-to-date overviews of the latest advancements in PINNs. It emphasizes enhancements in network design, feature expansion, optimization strategies, uncertainty quantification, and theoretical insights. The paper also surveys key applications across a range of domains.

✨ What’s unique

This review paper stands out in the following ways:

- A structured taxonomy of algorithmic developments. One of the freshest contributions of this paper is its taxonomy of algorithmic advancements. This new taxonomy scheme elegantly categorizes all the advancements into three core areas: (1) representation model, (2) handling governing equations, and (3) optimization process. This structure provides a clear framework for understanding both current developments and potential directions for future research. In addition, the illustrations used in the paper are top-notch and easily digestible.

- Spotlight on Physics-informed Kolmogorov–Arnold Networks (PIKANs). The Kolmogorov–Arnold Network (KAN), a new architecture based on the Kolmogorov–Arnold representation theorem, is currently a hot topic in deep learning. In the PINN community, some work has already been done to replace the multilayer perceptron (MLP) representation with KANs to gain more expressiveness and training efficiency. Until now, the community has lacked a comprehensive review of this new line of research, and this review paper (section 3.1) fills exactly that gap.

- Review on uncertainty quantification (UQ) in PINNs. UQ is essential for the reliable and trustworthy deployment of PINNs when tackling real-world engineering applications. In section 5, this paper provides a dedicated section on UQ, explaining the common sources of uncertainty in solving differential equations with PINNs and reviewing strategies for quantifying prediction confidence.

- Theoretical advances in PINN training dynamics. In practice, training PINNs is non-trivial. Practitioners are often puzzled by why PINN training sometimes fails, or how PINNs should be trained optimally. In section 6.2, this paper provides one of the most detailed and up-to-date discussions on this aspect, covering the Neural Tangent Kernel (NTK) analysis of PINNs, information bottleneck theory, and multi-objective optimization challenges.

🛠 Practical goodies

Even though this review paper leans towards the theory-heavy side, two particularly valuable aspects stand out from a practical perspective:

- A timeline of algorithmic advances in PINNs. In the table in Appendix A, this paper tracks the milestones of key advancements in PINNs, from the original PINN formulation to the most recent extensions to KANs. If you’re working on algorithmic improvements, this timeline gives you a clear view of what’s already been done. If you’re struggling with PINN training or accuracy, you can use this table to find existing methods that might solve your issue.

- A broad overview of PINN applications across domains. Compared to all the other reviews, this paper strives to give the most comprehensive and updated coverage of PINN applications in not only the engineering domains but also other less-covered fields such as finance. Practitioners can easily find prior works conducted in their domains and draw inspiration.

💡Who would benefit

- For practitioners working in safety-critical fields that need confidence intervals or reliability estimates on their PINN predictions, the discussion on UQ would be useful. If you are struggling with PINN training instability, slow convergence, or unexpected failures, the discussion on PINN training dynamics can help unpack the theoretical reasons behind these issues.

- Researchers may find this paper especially interesting because of the new taxonomy, which allows them to see patterns and identify gaps and opportunities for novel contributions. In addition, the review of cutting-edge work on PIKANs can be a source of inspiration.

3️⃣ Physics-Informed Neural Networks: An Application-Centric Guide

📄 Paper at a glance

- Authors: S. Guo (this author)

- Year: 2024

- Link: Medium

🔍 What it covers

This article reviews how PINNs are used to tackle different types of engineering tasks. For each task category, the article discusses the problem statement, why PINNs are useful, and how PINNs can be implemented to address the problem, followed by a concrete use case published in the literature.

✨ What’s unique

Unlike most reviews that categorize PINN applications either by the type of differential equations solved or by specific engineering domains, this article picks an angle that practitioners care about the most: the engineering tasks solved by PINNs. This work is based on reviewing PINN case studies scattered across various engineering domains. The outcome is a distilled list of recurring functional usage patterns of PINNs:

- Predictive modeling and simulations, where PINNs are leveraged for dynamical system forecasting, coupled system modeling, and surrogate modeling.

- Optimization, where PINNs are commonly employed to achieve efficient design optimization, inverse design, model predictive control, and optimized sensor placement.

- Data-driven insights, where PINNs are used to identify the unknown parameters or functional forms of the system, as well as to assimilate observational data to better estimate the system states.

- Data-driven enhancement, where PINNs are used to reconstruct the field and enhance the resolution of the observational data.

- Monitoring, diagnostics, and health assessment, where PINNs are leveraged to act as virtual sensors, anomaly detectors, health monitors, and predictive maintenance tools.
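As a small illustration of the parameter identification pattern from the list above, here is a deliberately simplified sketch. I assume a toy system du/dt = -k·u with an unknown decay rate k, and I assume a surrogate of u is already available (here the exact solution stands in for a trained network). With the surrogate held fixed, minimizing the mean squared physics residual over k has a closed-form solution. In an actual PINN, k would be a trainable parameter optimized jointly with the network weights. The function name `identify_decay_rate` is my own.

```python
import math

def identify_decay_rate(u_fn, du_fn, collocation_points):
    """Least-squares estimate of k in du/dt = -k * u, with the surrogate u held fixed.

    Minimizing mean((du/dt + k*u)^2) over k gives k = -sum(du*u) / sum(u*u).
    """
    numerator = sum(du_fn(t) * u_fn(t) for t in collocation_points)
    denominator = sum(u_fn(t) ** 2 for t in collocation_points)
    return -numerator / denominator

# Stand-in for a trained surrogate: the exact solution with k = 2 (assumed for illustration)
true_k = 2.0
surrogate = lambda t: math.exp(-true_k * t)
surrogate_dt = lambda t: -true_k * math.exp(-true_k * t)

points = [i / 49 for i in range(50)]  # 50 collocation points in [0, 1]
estimated_k = identify_decay_rate(surrogate, surrogate_dt, points)
print(f"estimated k = {estimated_k:.6f}")
```

The point of the sketch is only that the governing equation itself provides the signal for recovering the unknown parameter. Real use cases add noisy measurements, a data-misfit term, and a network that must be trained at the same time.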

🛠 Practical goodies

This article places practitioners’ needs at the forefront. While most existing review papers merely answer the question, “Has PINN been used in my field?”, practitioners often seek more specific guidance: “Has PINN been used for the type of problem I’m trying to solve?”. This is precisely what this article tries to address.

By using the proposed five-category functional classification, practitioners can conveniently map their problems to these categories, see how others have solved them, and learn what worked and what did not. Instead of reinventing the wheel, practitioners can leverage established use cases and adapt proven solutions to their own problems.

💡Who would benefit

This review is best for practitioners who want to see how PINNs are actually being used in the real world. It can also be particularly valuable for cross-disciplinary innovation, as practitioners can learn from solutions developed in other fields.

4️⃣ An Expert’s Guide to Training Physics-informed Neural Networks

📄 Paper at a glance

- Authors: S. Wang, S. Sankaran, H. Wang, P. Perdikaris

- Year: 2023

- Link: arXiv

🔍 What it covers

Even though it doesn’t market itself as a “standard” review, this paper goes all in on providing a comprehensive handbook for training PINNs. It presents a detailed set of best practices that address issues like spectral bias, unbalanced loss terms, and causality violations. It also introduces challenging benchmarks and extensive ablation studies to demonstrate these methods.

✨ What’s unique

- A unified “expert’s guide”. The main authors are active researchers in PINNs who have worked extensively on improving PINN training efficiency and model accuracy over the past several years. This paper is a distilled summary of the authors’ past work, synthesizing a broad range of recent PINN techniques (e.g., Fourier feature embeddings, adaptive loss weighting, causal training) into a cohesive training pipeline. This feels like having a mentor who tells you exactly what does and doesn’t work with PINNs.

- A thorough hyperparameter tuning study. This paper conducts various experiments to show how different tweaks (e.g., different architectures, training schemes, etc.) play out on different PDE tasks. Their ablation studies show precisely which methods move the needle, and by how much.

- PDE benchmarks. The paper compiles a suite of challenging PDE benchmarks and offers state-of-the-art results that PINNs can achieve.
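To give a feel for the three techniques named above (Fourier feature embeddings, adaptive loss weighting, causal training), here is a rough plain-Python sketch of the core formula behind each, as I understand them. This is not the paper's library or its exact implementation: the function names, the default `scale` and `epsilon` values, and the sample numbers are my own assumptions, and details such as moving averages of the weights or a 2π factor in the embedding vary between formulations.

```python
import math
import random

def make_fourier_matrix(num_features, input_dim, scale=1.0, seed=0):
    """Sample a frequency matrix B with entries drawn from N(0, scale^2)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, scale) for _ in range(input_dim)]
            for _ in range(num_features)]

def fourier_features(x, B):
    """Embed input x as [cos(Bx), sin(Bx)] to help the network fit high frequencies."""
    projections = [sum(b * xj for b, xj in zip(row, x)) for row in B]
    return [math.cos(p) for p in projections] + [math.sin(p) for p in projections]

def gradient_norm_weights(grad_norms):
    """Loss balancing: weight each term by (sum of gradient norms) / (its own norm),
    so that all weighted terms end up with the same gradient magnitude."""
    total = sum(grad_norms)
    return [total / g for g in grad_norms]

def causal_weights(residual_losses, epsilon=1.0):
    """Causal training: the weight of time slice i is exp(-epsilon * sum of the
    residual losses of all earlier slices), so later times are only emphasized
    once earlier times are well resolved."""
    weights, cumulative = [], 0.0
    for loss in residual_losses:
        weights.append(math.exp(-epsilon * cumulative))
        cumulative += loss
    return weights

# Illustrative inputs (made-up numbers, not results from the paper)
B = make_fourier_matrix(num_features=4, input_dim=2)
features = fourier_features([0.5, 0.25], B)          # 8 embedded features
loss_weights = gradient_norm_weights([1.0, 10.0, 100.0])
time_weights = causal_weights([0.5, 0.1, 0.0, 2.0])

print(len(features), loss_weights, time_weights)
```

In a real pipeline, the embedded features replace the raw coordinates as network input, the gradient norms are measured for each loss term during training, and the per-slice residual losses are recomputed at every step.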

🛠 Practical goodies

- A problem-solution cheat sheet. This paper thoroughly documents various techniques addressing common PINN training pain points. Each technique is clearly presented using a structured format: the why (motivation), the how (how the approach addresses the problem), and the what (the implementation details). This makes it very easy for practitioners to identify the “cure” based on the “symptoms” observed in their PINN training process. What’s great is that the authors transparently discuss the potential pitfalls of each approach, allowing practitioners to make well-informed decisions and effective trade-offs.

- Empirical insights. The paper shares valuable empirical insights obtained from extensive hyperparameter tuning experiments. It offers practical guidance on choosing suitable hyperparameters, e.g., network architectures and learning rate schedules, and demonstrates how these parameters interact with the advanced PINN training techniques proposed.

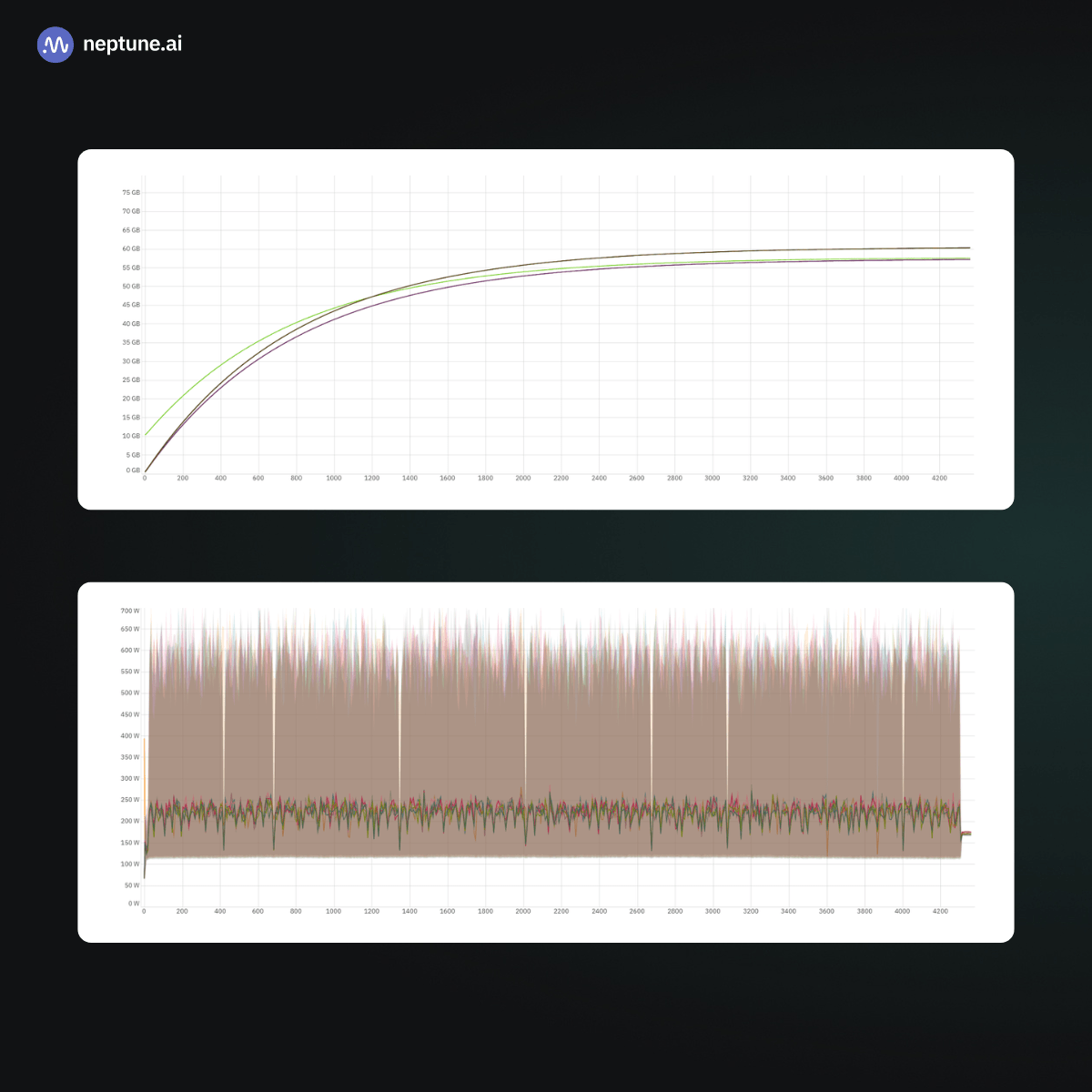

- Ready-to-use library. The paper is accompanied by an optimized JAX library that practitioners can directly adopt or customize. The library supports multi-GPU environments and is ready for scaling to large-scale problems.

💡Who would benefit

- Practitioners who are struggling with unstable or slow PINN training can find many practical strategies to fix common pathologies. They can also benefit from the straightforward templates (in JAX) to quickly adapt PINNs to their own PDE setups.

- Researchers looking for challenging benchmark problems and aiming to benchmark new PINN ideas against well-documented baselines will find this paper especially handy.

5️⃣ Domain-Specific Review Papers

Beyond general reviews of PINNs, there are several nice review papers that focus on specific scientific and engineering domains. If you’re working in one of these fields, these reviews could provide a deeper dive into best practices and cutting-edge applications.

1. Heat Transfer Problems

Paper: Physics-Informed Neural Networks for Heat Transfer Problems

The paper provides an application-centric discussion on how PINNs can be used to tackle various thermal engineering problems, including inverse heat transfer, convection-dominated flows, and phase-change modeling. It highlights real-world challenges such as missing boundary conditions, sensor-driven inverse problems, and adaptive cooling system design. The industrial case study related to power electronics is particularly insightful for understanding the usage of PINNs in practice.

2. Power Systems

Paper: Applications of Physics-Informed Neural Networks in Power Systems — A Review

This paper offers a structured overview of how PINNs are applied to critical power grid challenges, including state/parameter estimation, dynamic analysis, power flow calculation, optimal power flow (OPF), anomaly detection, and model synthesis. For each type of application, the paper discusses the shortcomings of traditional power system solutions and explains why PINNs could be advantageous in addressing those shortcomings. This comparative summary is useful for understanding the motivation for adopting PINNs.

3. Fluid Mechanics

Paper: Physics-informed neural networks (PINNs) for fluid mechanics: A review

This paper explores three detailed case studies that demonstrate PINN applications in fluid dynamics: (1) 3D wake flow reconstruction using sparse 2D velocity data, (2) inverse problems in compressible flow (e.g., shock wave prediction with minimal boundary data), and (3) biomedical flow modeling, where PINNs infer thrombus material properties from phase-field data. The paper highlights how PINNs overcome limitations in traditional CFD, e.g., mesh dependency, expensive data assimilation, and difficulty handling ill-posed inverse problems.

4. Additive Manufacturing

Paper: A review on physics-informed machine learning for monitoring metal additive manufacturing process

This paper examines how PINNs address critical challenges specific to additive manufacturing process prediction or monitoring, including temperature field prediction, fluid dynamics modeling, fatigue life estimation, accelerated finite element simulations, and process characteristics prediction.

6️⃣ Conclusion

In this blog post, we went through a curated list of review papers on PINNs, covering fundamental theoretical insights, the latest algorithmic advancements, and practical application-oriented perspectives. For each paper, we highlighted unique contributions, key takeaways, and the audience that would benefit the most from these insights. I hope this curated collection can help you better navigate the evolving field of PINNs.
